You can find the source code on GitHub here.

Introduction

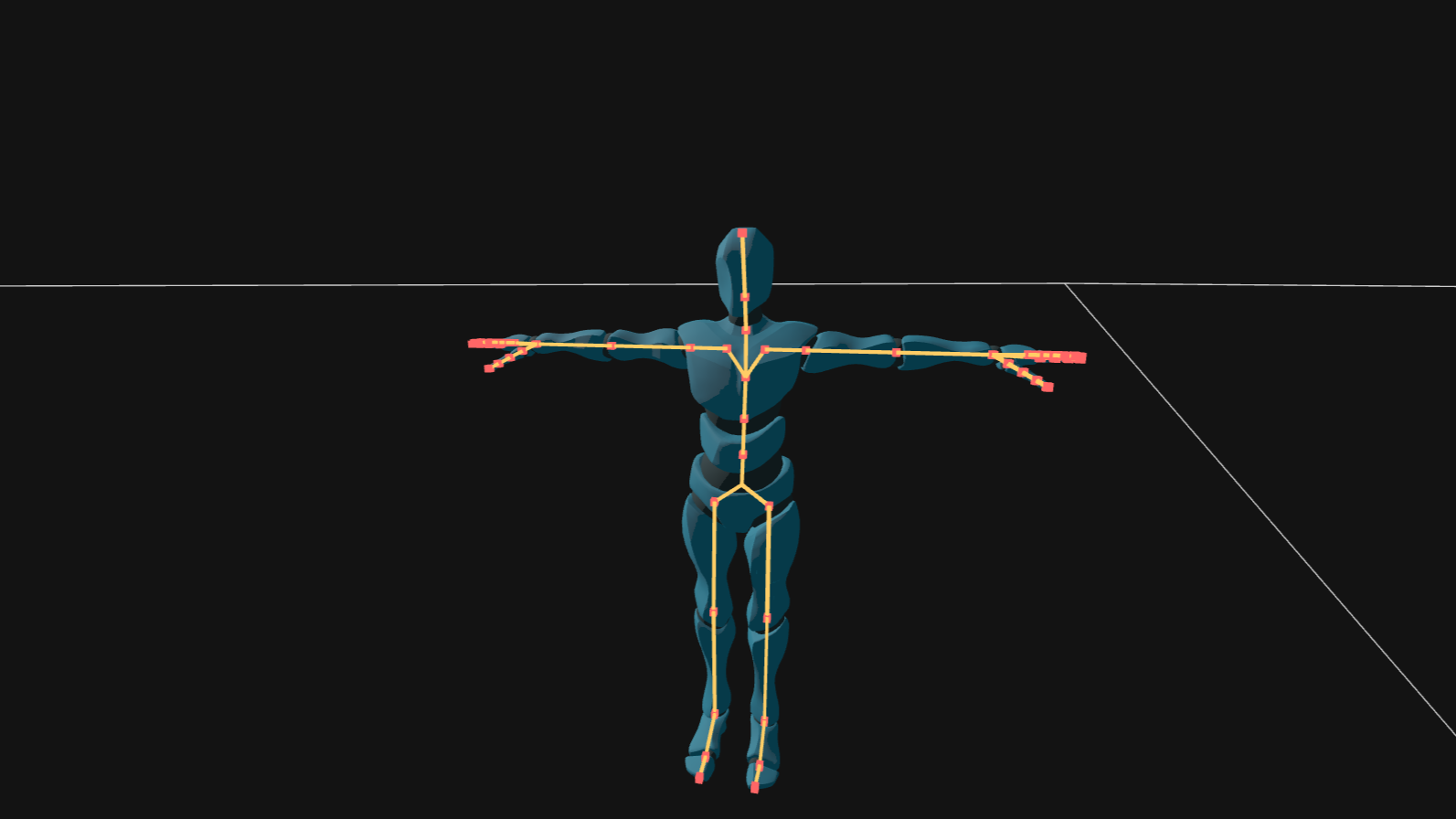

Making an animation system from scratch can be challenging, but many resources explain how skeletal animation works very well, and I've listed some of them at the end. I will only mention a few key things that helped me (mostly about exporting from Blender).

Blender Exporter

Animations are usually authored in 3D software such as Maya or Blender. Blender is free and doesn’t require a license, so I used it.

Dealing with Blender’s API was probably the toughest part of this project; it took time to figure out how to retrieve the information I needed.

I wrote two Python scripts, each of which exports a custom file format:

- Mesh exporter (`.mesh` file): contains mesh info and skeleton/armature info (if a skeleton exists). Mesh info is basically vertices and material info, and the skeleton info contains joint names, parent_ids, “inverse bind pose matrices”, and vertex weights.

- Animation exporter (`.sampled_animation` file): contains metadata about the exported animation, like frame rate and number of frames, and the joint matrices at every frame of the animation. Blender has something called “actions”, which are basically different animations. For example, your character can have an idle animation, a walking animation, and a running animation; each is a different “action”, and each is exported to a separate `.sampled_animation` file.
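To make the two file descriptions above more concrete, here is one hypothetical way to hold their contents in memory. The class and field names are illustrative, per-vertex weights are assumed to be (joint index, weight) pairs, and JSON only stands in for the real custom binary/text format; this is a sketch, not the exporters' actual layout.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass

Matrix4 = list[list[float]]  # 4x4 matrix as nested lists, row-major

@dataclass
class Skeleton:
    joint_names: list[str]
    parent_ids: list[int]                           # -1 marks the root joint
    inverse_bind_pose: list[Matrix4]                # one matrix per joint
    vertex_weights: list[list[tuple[int, float]]]   # per vertex: (joint index, weight), typically up to 4

@dataclass
class MeshFile:
    positions: list[tuple[float, float, float]]
    materials: list[str]                            # material info, simplified to names here
    skeleton: Skeleton | None = None                # only present if the mesh has an armature

@dataclass
class SampledAnimation:
    name: str                                       # the Blender action this was sampled from
    frame_rate: float
    frame_count: int
    joint_matrices: list[list[Matrix4]]             # [frame][joint], relative to the parent joint

def animation_to_json(anim: SampledAnimation) -> str:
    """Serialize one sampled animation (hypothetical stand-in for the real format)."""
    return json.dumps(asdict(anim))

def animation_from_json(text: str) -> SampledAnimation:
    """Load a sampled animation written by animation_to_json."""
    return SampledAnimation(**json.loads(text))
```

Keeping one `SampledAnimation` per Blender action mirrors the one-file-per-action rule above.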

Some general notes

- Names of coordinate systems and spaces are confusing; each space is often referred to by several names. So every time I mention a space, I will also mention its other names. This is, of course, according to my understanding.

- The whole process of animating a mesh, in a nutshell: we have vertices in mesh space (object/model space). Just as we usually transform these vertices in the vertex shader with an object_to_projection_matrix (a.k.a. the MVP matrix), to animate them we first transform them every frame by rotating/translating within the same mesh space, and THEN apply the object_to_projection_matrix. These per-joint transforms are called “skinning matrices”. The skeleton is made up of joints (or bones), and each vertex is attached to multiple joints (typically up to 4). We transform the joints when animating, and the vertices follow.

- Animations are typically exported at 24, 30, or 60 FPS, while games often run at higher frame rates, so we need to interpolate between exported frames to get smooth animation. We call these exported frames “samples” or “keyframes”.

- When interpolating between samples, it’s best to keep the joint transforms decomposed, i.e., translation, rotation, and scale stored separately. Interpolating each component on its own (lerp for translation and scale, slerp or normalized lerp for rotation quaternions) gives much better results than lerping entire 4x4 matrices, which can shrink and shear the result. The joint transforms should also be in local space (relative to the joint’s parent) when interpolating; if we interpolate in object space (mesh/model/global space), each joint moves independently of its parent and bones can visibly stretch or shrink.

- Blender usually gives us the joint matrices relative to the ARMATURE object. We only care about matrices in local space (relative to parent) and in object space (mesh/model/global space). To bridge the two, we make sure that the ARMATURE object and the MESH object share the same origin in Blender, and we’re good to go (do CTRL+A -> Apply All Transforms for both the MESH and ARMATURE objects).

- A joint’s “inverse bind pose matrix” (or “offset matrix”) is just a matrix that transforms mesh vertices from object space (mesh/model/global space) into the joint’s own space, i.e., the joint’s coordinate frame in the bind (rest) pose. It is the inverse of the joint’s object-space bind pose matrix.
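To make the skinning notes above concrete, here is a minimal pure-Python sketch of linear blend skinning. It assumes 4x4 matrices stored as nested lists (row-major, column vectors, translation in the last column, like Blender's mathutils), joints sorted so that parents come before children, and a root parent id of -1; all function names are illustrative.

```python
IDENTITY = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

def translation(x, y, z):
    """4x4 translation matrix (row-major, column vectors: p' = M @ p)."""
    m = [row[:] for row in IDENTITY]
    m[0][3], m[1][3], m[2][3] = x, y, z
    return m

def mat_mul(a, b):
    """4x4 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def transform_point(m, p):
    """Apply a 4x4 affine matrix to a 3D point (w = 1)."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

def global_transforms(local, parent_ids):
    """Object-space joint matrices from parent-relative (local) ones.
    Assumes parents are listed before their children and the root's parent id is -1."""
    result = []
    for joint, m in enumerate(local):
        parent = parent_ids[joint]
        result.append(m if parent < 0 else mat_mul(result[parent], m))
    return result

def skinning_matrices(local, parent_ids, inverse_bind):
    """Skinning matrix = current object-space joint matrix @ inverse bind pose matrix."""
    return [mat_mul(g, ib) for g, ib in zip(global_transforms(local, parent_ids), inverse_bind)]

def skin_vertex(position, influences, skinning):
    """Linear blend skinning: weighted sum of the vertex transformed by each joint.
    influences: (joint index, weight) pairs whose weights sum to 1."""
    out = [0.0, 0.0, 0.0]
    for joint, weight in influences:
        q = transform_point(skinning[joint], position)
        for i in range(3):
            out[i] += weight * q[i]
    return tuple(out)
```

In the bind pose every skinning matrix is the identity, so vertices stay where they were modeled. In a typical engine, `global_transforms` and `skinning_matrices` run on the CPU once per frame, while `skin_vertex` is what the vertex shader does for each vertex.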

Blender’s bone matrices

To extract what you need from Blender, here is a description of the matrices for a joint called “Torso” in a skeleton/armature called “Armature”:

```python
import bpy

# "object space" is where mesh vertices exist (MESH object origin), a.k.a. "model space",
# or "global space" in the context of joints.
# "armature space" is where joint matrices often exist (ARMATURE object origin).
# This exporter _requires_ that the armature origin and mesh origin are the same
# (object space == armature space).

armature = bpy.data.objects['Armature']
pose_bone = armature.pose.bones["Torso"]

# The joint's current pose matrix (4x4) in armature space.
pose_matrix = pose_bone.matrix

# The joint's current pose matrix relative to its parent.
# A root joint has no parent, so its armature-space matrix is already its local matrix.
m_child = pose_bone.matrix
if pose_bone.parent is not None:
    m_parent = pose_bone.parent.matrix
    local_pose_matrix = m_parent.inverted() @ m_child
else:
    local_pose_matrix = m_child.copy()

# The joint's rest pose matrix (4x4) in armature space. Both expressions are the same.
rest_matrix = pose_bone.bone.matrix_local
rest_matrix = armature.data.bones["Torso"].matrix_local

# The joint's current pose matrix (4x4) relative to the same joint in rest pose.
# Its translation is zero unless the bone was moved in pose mode
# (connected bones can't be). We don't actually use this for anything.
basis_matrix = pose_bone.matrix_basis

# The joint's rest pose matrix (3x3) relative to its parent (no translation).
rest_rotation = armature.data.bones["Torso"].matrix
```

For the mesh exporter, we mainly care about `data.bones["Torso"].matrix_local.inverted()`, which transforms vertices from object space into the joint’s own (bind pose) space. This is known as the “inverse bind pose matrix” or the “offset matrix”.

For the animation exporter, we care about each joint’s current pose matrix relative to its parent (see above for how to retrieve it), taken at every frame of the action.
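Since interpolation works best on decomposed transforms, these parent-relative matrices need to be split into translation, rotation, and scale at some point (in the exporter or at load time). Inside Blender, mathutils' `Matrix.decompose()` already returns a (location, quaternion, scale) tuple; the pure-Python sketch below only shows what that split involves. It assumes a row-major 4x4 matrix with column vectors (translation in the last column, as in mathutils), positive scale, and no shear, and it returns quaternions in (w, x, y, z) order like mathutils.

```python
import math

def decompose(m):
    """Split a 4x4 affine matrix into translation, rotation quaternion (w, x, y, z), and scale.
    Assumes no shear and positive scale."""
    translation = (m[0][3], m[1][3], m[2][3])
    # Each of the first three columns is a rotated basis axis multiplied by that axis' scale.
    scale = tuple(math.sqrt(m[0][c] ** 2 + m[1][c] ** 2 + m[2][c] ** 2) for c in range(3))
    r = [[m[i][c] / scale[c] for c in range(3)] for i in range(3)]  # pure rotation
    # Rotation matrix -> quaternion, branching on the largest diagonal term for stability.
    trace = r[0][0] + r[1][1] + r[2][2]
    if trace > 0:
        s = math.sqrt(trace + 1.0) * 2  # s = 4w
        w, x = 0.25 * s, (r[2][1] - r[1][2]) / s
        y, z = (r[0][2] - r[2][0]) / s, (r[1][0] - r[0][1]) / s
    elif r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2]) * 2  # s = 4x
        w, x = (r[2][1] - r[1][2]) / s, 0.25 * s
        y, z = (r[0][1] + r[1][0]) / s, (r[0][2] + r[2][0]) / s
    elif r[1][1] > r[2][2]:
        s = math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2]) * 2  # s = 4y
        w, x = (r[0][2] - r[2][0]) / s, (r[0][1] + r[1][0]) / s
        y, z = 0.25 * s, (r[1][2] + r[2][1]) / s
    else:
        s = math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1]) * 2  # s = 4z
        w, x = (r[1][0] - r[0][1]) / s, (r[0][2] + r[2][0]) / s
        y, z = (r[1][2] + r[2][1]) / s, 0.25 * s
    return translation, (w, x, y, z), scale
```

For example, a matrix that scales by 2, rotates 90° about Z, and translates by (1, 2, 3) decomposes into translation (1, 2, 3), scale (2, 2, 2), and a quaternion of roughly (0.707, 0, 0, 0.707).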

Structure

I often struggle with architecting code, so I look for examples of how real games do things. The references below include an extremely useful series on how animation systems are implemented in games. You can have multiple layered data structures that are responsible for updating and playing back animations:

- A structure responsible for loading the `.sampled_animation` file and storing joint transforms at every sample.

- A structure that works on top of the sampled animation structure and is updated every frame: it advances the animation by a fixed delta_time, finds which two samples the current time falls between, and finally lerps between those two samples to get an in-between transform for each joint.

- To blend/cross-fade between different animations, we can have a separate structure that works on top of the second one.

- You can have a higher level structure that acts as a finite-state machine which queues and transitions between animations.
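The sampled-animation and playback layers described above can be sketched as follows. This is a minimal sketch, assuming samples are already decomposed into local-space translation, rotation quaternion (w, x, y, z), and scale per joint; all names are hypothetical, and rotations use normalized lerp (nlerp), a common cheap stand-in for slerp.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z)

@dataclass
class JointPose:
    translation: Vec3
    rotation: Quat
    scale: Vec3

def lerp(a, b, t):
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def nlerp(a, b, t):
    """Normalized lerp between unit quaternions, taking the shorter path."""
    if sum(x * y for x, y in zip(a, b)) < 0:  # q and -q are the same rotation
        b = tuple(-y for y in b)
    q = lerp(a, b, t)
    n = math.sqrt(sum(x * x for x in q))
    return tuple(x / n for x in q)

def blend_pose(a, b, t):
    """Interpolate two local-space poses joint by joint."""
    return [JointPose(lerp(ja.translation, jb.translation, t),
                      nlerp(ja.rotation, jb.rotation, t),
                      lerp(ja.scale, jb.scale, t)) for ja, jb in zip(a, b)]

@dataclass
class SampledAnimation:
    frame_rate: float
    samples: list[list[JointPose]]  # samples[frame][joint], relative to the parent joint

    @property
    def duration(self):
        return (len(self.samples) - 1) / self.frame_rate

class AnimationPlayer:
    """Advances time through a SampledAnimation and returns in-between poses."""

    def __init__(self, animation, looping=True):
        self.animation = animation
        self.looping = looping
        self.time = 0.0

    def advance(self, delta_time):
        self.time += delta_time
        duration = self.animation.duration
        if self.looping and duration > 0:
            self.time %= duration  # assumes the last sample matches the first
        else:
            self.time = min(self.time, duration)

    def current_pose(self):
        # Find the two samples around the current time and the fraction between them.
        frame = self.time * self.animation.frame_rate
        last = len(self.animation.samples) - 1
        i = min(int(frame), last)
        j = min(i + 1, last)
        return blend_pose(self.animation.samples[i], self.animation.samples[j], frame - i)
```

Because `blend_pose` only needs two poses and a weight, the same function can cross-fade between the current poses of two players by ramping the weight from 0 to 1 over the transition.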

References and Resources

- The rig is from Mixamo.

- Rigify Blender add-on - for rigging meshes.

- Skeletal Animation in OpenGL - good video explanation.

- LearnOpenGL Skeletal Animation - good read.

- Jonathan Blow Animation Groundwork - for learning about a real-world implementation.

- Jason Gregory’s Game Engine Architecture - Chapter 11. Animation Systems.